derfinder users guide

Leonardo Collado-Torres

Lieber Institute for Brain Development, Johns Hopkins Medical CampusCenter for Computational Biology, Johns Hopkins Universitylcolladotor@gmail.com

13 December 2024

Source:vignettes/derfinder-users-guide.Rmd

derfinder-users-guide.RmdAsking for help

Please read the basics

of using derfinder in the quick start guide. Thank

you.

derfinder users guide

If you haven’t already, please read the quick start to using derfinder vignette. It explains the basics of using derfinder, how to ask for help, and showcases an example analysis.

The derfinder users guide goes into more depth about what you can do with derfinder. It covers the two implementations of the DER Finder approach (Frazee, Sabunciyan, Hansen, Irizarry, and Leek, 2014). That is, the (A) expressed regions-level and (B) single base-level F-statistics implementations. The vignette also includes advanced material for fine tuning some options, working with non-human data, and example scripts for a high-performance computing cluster.

Expressed regions-level

The expressed regions-level implementation is based on summarizing the coverage information for all your samples and applying a cutoff to that summary. For example, by calculating the mean coverage at every base and then checking if it’s greater than some cutoff value of interest. Contiguous bases passing the cutoff become a candidate Expressed Region (ER). We can then construct a matrix summarizing the base-level coverage for each sample for the set of ERs. This matrix can then be using with packages developed for feature counting (limma, DESeq2, edgeR, etc) to determine which ERs have differential expression signal. That is, to identify the Differentially Expressed Regions (DERs).

regionMatrix()

Commonly, users have aligned their raw RNA-seq data and saved the alignments in BAM files. Some might have chosen to compress this information into BigWig files. BigWig files are much faster to load than BAM files and are the type of file we prefer to work with. However, we can still identify the expressed regions from BAM files.

The function regionMatrix() will require you to load the

data (either from BAM or BigWig files) and store it all in memory. It

will then calculate the mean coverage across all samples, apply the

cutoff you chose, and determine the expressed regions.

This is the path you will want to follow in most scenarios.

railMatrix()

Our favorite method for identifying expressed regions is based on pre-computed summary coverage files (mean or median) as well as coverage files by sample. Rail is a cloud-enabled aligner that will generate:

- Per chromosome, a summary (mean or median) coverage BigWig file. Note that the summary is adjusted for library size and is by default set to libraries with 40 million reads.

- Per sample, a coverage BigWig file with the un-adjusted coverage.

Rail does a great job in creating these

files for us, saving time and reducing the memory load needed for this

type of analysis with R.

If you have this type of data or can generate it from BAM files with

other tools, you will be interested in using the

railMatrix() function. The output is identical to the one

from regionMatrix(). It’s just much faster and memory

efficient. The only drawback is that BigWig files are not fully

supported in Windows as of rtracklayer

version 1.25.16.

We highly recommend this approach. Rail has also other significant features such as: scalability, reduced redundancy, integrative analysis, mode agnosticism, and inexpensive cloud implementation. For more information, visit rail.bio.

Single base-level F-statistics

The DER Finder approach was originally implemented by calculating t-statistics between two groups and using a hidden markov model to determine the expression states: not expressed, expressed, differentially expressed (Frazee, Sabunciyan, Hansen et al., 2014). The original software works but had areas where we worked to improve it, which lead to the single base-level F-statistics implementation. Also note that the original software is no longer maintained.

This type of analysis first loads the data and preprocess it in a format that saves time and reduces memory later. It then fits two nested models (an alternative and a null model) with the coverage information for every single base-pair of the genome. Using the two fitted models, it calculates an F-statistic. Basically, it generates a vector along the genome with F-statistics.

A cutoff is then applied to the F-statistics and contiguous base-pairs of the genome passing the cutoff are considered a candidate Differentially Expressed Region (DER).

Calculating F-statistics along the genome is computationally intensive, but doable. The major resource drain comes from assigning p-values to the DERs. To do so, we permute the model matrices and re-calculate the F-statistics generating a set of null DERs. Once we have enough null DERs, we compare the observed DERs against the null DERs to calculate p-values for the observed DERs.

This type of analysis at the chromosome level is done by the

analyzeChr() function, which is a high level function using

many other pieces of derfinder.

Once all chromosomes have been processed, mergeResults()

combines them.

Which implementation of the DER Finder approach you will want to use depends on your specific use case and computational resources available. But in general, we recommend starting with the expressed regions-level implementation.

Example data

In this vignette we we will analyze a small subset of the samples from the BrainSpan Atlas of the Human Brain (BrainSpan, 2011) publicly available data.

We first load the required packages.

## Load libraries

library("derfinder")

library("derfinderData")

library("GenomicRanges")Phenotype data

For this example, we created a small table with the relevant phenotype data for 12 samples: 6 from fetal samples and 6 from adult samples. We chose at random a brain region, in this case the amygdaloid complex. For this example we will only look at data from chromosome 21. Other variables include the age in years and the gender. The data is shown below.

library("knitr")

## Get pheno table

pheno <- subset(brainspanPheno, structure_acronym == "AMY")

## Display the main information

p <- pheno[, -which(colnames(pheno) %in% c(

"structure_acronym",

"structure_name", "file"

))]

rownames(p) <- NULL

kable(p, format = "html", row.names = TRUE)| gender | lab | Age | group | |

|---|---|---|---|---|

| 1 | F | HSB97.AMY | -0.4423077 | fetal |

| 2 | M | HSB92.AMY | -0.3653846 | fetal |

| 3 | M | HSB178.AMY | -0.4615385 | fetal |

| 4 | M | HSB159.AMY | -0.3076923 | fetal |

| 5 | F | HSB153.AMY | -0.5384615 | fetal |

| 6 | F | HSB113.AMY | -0.5384615 | fetal |

| 7 | F | HSB130.AMY | 21.0000000 | adult |

| 8 | M | HSB136.AMY | 23.0000000 | adult |

| 9 | F | HSB126.AMY | 30.0000000 | adult |

| 10 | M | HSB145.AMY | 36.0000000 | adult |

| 11 | M | HSB123.AMY | 37.0000000 | adult |

| 12 | F | HSB135.AMY | 40.0000000 | adult |

Load the data

derfinder

offers three functions related to loading raw data. The first one,

rawFiles(), is a helper function for identifying the full

paths to the input files. Next, loadCoverage() loads the

base-level coverage data from either BAM or BigWig files for a specific

chromosome. Finally, fullCoverage() will load the coverage

for a set of chromosomes using loadCoverage().

We can load the data from derfinderData

(Collado-Torres, Jaffe, and Leek, 2024) by first identifying the paths

to the BigWig files with rawFiles() and then loading the

data with fullCoverage(). Note that the BrainSpan

data is already normalized by the total number of mapped reads in each

sample. However, that won’t be the case with most data sets in which

case you might want to use the totalMapped and

targetSize arguments. The function

getTotalMapped() will be helpful to get this

information.

## Determine the files to use and fix the names

files <- rawFiles(system.file("extdata", "AMY", package = "derfinderData"),

samplepatt = "bw", fileterm = NULL

)

names(files) <- gsub(".bw", "", names(files))

## Load the data from disk

system.time(fullCov <- fullCoverage(

files = files, chrs = "chr21",

totalMapped = rep(1, length(files)), targetSize = 1

))## 2024-12-13 15:14:13.312856 fullCoverage: processing chromosome chr21## 2024-12-13 15:14:13.324585 loadCoverage: finding chromosome lengths## 2024-12-13 15:14:13.344693 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB113.bw## 2024-12-13 15:14:13.490278 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB123.bw## 2024-12-13 15:14:13.6441 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB126.bw## 2024-12-13 15:14:13.727383 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB130.bw## 2024-12-13 15:14:13.806863 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB135.bw## 2024-12-13 15:14:13.876209 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB136.bw## 2024-12-13 15:14:13.964846 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB145.bw## 2024-12-13 15:14:14.045591 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB153.bw## 2024-12-13 15:14:14.130819 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB159.bw## 2024-12-13 15:14:14.213469 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB178.bw## 2024-12-13 15:14:14.291178 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB92.bw## 2024-12-13 15:14:14.389308 loadCoverage: loading BigWig file /__w/_temp/Library/derfinderData/extdata/AMY/HSB97.bw## 2024-12-13 15:14:14.500588 loadCoverage: applying the cutoff to the merged data## 2024-12-13 15:14:14.512845 filterData: normalizing coverage## 2024-12-13 15:14:14.722615 filterData: done normalizing coverage## 2024-12-13 15:14:14.731288 filterData: originally there were 48129895 rows, now there are 48129895 rows. Meaning that 0 percent was filtered.## user system elapsed

## 1.432 0.028 1.461Alternatively, since the BigWig files are publicly available from

BrainSpan (see here),

we can extract the relevant coverage data using

fullCoverage(). Note that as of rtracklayer

1.25.16 BigWig files are not supported on Windows: you can find the

fullCov object inside derfinderData

to follow the examples.

## Determine the files to use and fix the names

files <- pheno$file

names(files) <- gsub(".AMY", "", pheno$lab)

## Load the data from the web

system.time(fullCov <- fullCoverage(

files = files, chrs = "chr21",

totalMapped = rep(1, length(files)), targetSize = 1

))Note how loading the coverage for 12 samples from the web was quite fast. Although in this case we only retained the information for chromosome 21.

The result of fullCov is a list with one element per

chromosome. If no filtering was performed, each chromosome has a

DataFrame with the number of rows equaling the number of

bases in the chromosome with one column per sample.

## Lets explore it

fullCov## $chr21

## DataFrame with 48129895 rows and 12 columns

## HSB113 HSB123 HSB126 HSB130 HSB135 HSB136 HSB145 HSB153 HSB159 HSB178

## <Rle> <Rle> <Rle> <Rle> <Rle> <Rle> <Rle> <Rle> <Rle> <Rle>

## 1 0 0 0 0 0 0 0 0 0 0

## 2 0 0 0 0 0 0 0 0 0 0

## 3 0 0 0 0 0 0 0 0 0 0

## 4 0 0 0 0 0 0 0 0 0 0

## 5 0 0 0 0 0 0 0 0 0 0

## ... ... ... ... ... ... ... ... ... ... ...

## 48129891 0 0 0 0 0 0 0 0 0 0

## 48129892 0 0 0 0 0 0 0 0 0 0

## 48129893 0 0 0 0 0 0 0 0 0 0

## 48129894 0 0 0 0 0 0 0 0 0 0

## 48129895 0 0 0 0 0 0 0 0 0 0

## HSB92 HSB97

## <Rle> <Rle>

## 1 0 0

## 2 0 0

## 3 0 0

## 4 0 0

## 5 0 0

## ... ... ...

## 48129891 0 0

## 48129892 0 0

## 48129893 0 0

## 48129894 0 0

## 48129895 0 0If filtering was performed, each chromosome also has a logical

Rle indicating which bases of the chromosome passed the

filtering. This information is useful later on to map back the results

to the genome coordinates.

Filter coverage

Depending on the use case, you might want to filter the base-level

coverage at the time of reading it, or you might want to keep an

unfiltered version. By default both loadCoverage() and

fullCoverage() will not filter.

If you decide to filter, set the cutoff argument to a

positive value. This will run filterData(). Note that you

might want to standardize the library sizes prior to filtering if you

didn’t already do it when creating the fullCov object. You

can do so by by supplying the totalMapped and

targetSize arguments to filterData(). Note:

don’t use these arguments twice in fullCoverage() and

filterData().

In this example, we prefer to keep both an unfiltered and filtered version. For the filtered version, we will retain the bases where at least one sample has coverage greater than 2.

## Filter coverage

filteredCov <- lapply(fullCov, filterData, cutoff = 2)## 2024-12-13 15:14:15.251428 filterData: originally there were 48129895 rows, now there are 130356 rows. Meaning that 99.73 percent was filtered.The result is similar to fullCov but with the genomic

position index as shown below.

## Similar to fullCov but with $position

filteredCov## $chr21

## $chr21$coverage

## DataFrame with 130356 rows and 12 columns

## HSB113 HSB123 HSB126 HSB130

## <Rle> <Rle> <Rle> <Rle>

## 1 2.00999999046326 0 0 0.0399999991059303

## 2 2.17000007629395 0 0 0.0399999991059303

## 3 2.21000003814697 0 0 0.0399999991059303

## 4 2.36999988555908 0 0 0.0399999991059303

## 5 2.36999988555908 0 0.0599999986588955 0.0399999991059303

## ... ... ... ... ...

## 130352 1.25 1.27999997138977 2.04999995231628 0.790000021457672

## 130353 1.21000003814697 1.24000000953674 2.04999995231628 0.790000021457672

## 130354 1.21000003814697 1.20000004768372 1.92999994754791 0.790000021457672

## 130355 1.16999995708466 1.20000004768372 1.92999994754791 0.790000021457672

## 130356 1.16999995708466 1.0900000333786 1.87000000476837 0.790000021457672

## HSB135 HSB136 HSB145 HSB153

## <Rle> <Rle> <Rle> <Rle>

## 1 0.230000004172325 0.129999995231628 0 0.589999973773956

## 2 0.230000004172325 0.129999995231628 0 0.629999995231628

## 3 0.230000004172325 0.129999995231628 0 0.670000016689301

## 4 0.230000004172325 0.129999995231628 0 0.709999978542328

## 5 0.230000004172325 0.129999995231628 0 0.75

## ... ... ... ... ...

## 130352 1.62999999523163 1.37000000476837 1.02999997138977 2.21000003814697

## 130353 1.62999999523163 1.37000000476837 1.02999997138977 2.21000003814697

## 130354 1.62999999523163 1.27999997138977 0.730000019073486 2.00999999046326

## 130355 1.62999999523163 1.27999997138977 0.600000023841858 2.00999999046326

## 130356 1.54999995231628 1.01999998092651 0.560000002384186 1.92999994754791

## HSB159 HSB178 HSB92 HSB97

## <Rle> <Rle> <Rle> <Rle>

## 1 0.150000005960464 0.0500000007450581 0.0700000002980232 1.58000004291534

## 2 0.150000005960464 0.0500000007450581 0.0700000002980232 1.58000004291534

## 3 0.150000005960464 0.0500000007450581 0.100000001490116 1.61000001430511

## 4 0.150000005960464 0.0500000007450581 0.129999995231628 1.64999997615814

## 5 0.25 0.100000001490116 0.129999995231628 1.67999994754791

## ... ... ... ... ...

## 130352 2.46000003814697 2.0699999332428 2.23000001907349 1.50999999046326

## 130353 2.46000003814697 2.0699999332428 2.23000001907349 1.50999999046326

## 130354 2.21000003814697 2.10999989509583 2.13000011444092 1.47000002861023

## 130355 2.10999989509583 2.10999989509583 2.13000011444092 1.47000002861023

## 130356 1.96000003814697 1.77999997138977 2.05999994277954 1.47000002861023

##

## $chr21$position

## logical-Rle of length 48129895 with 3235 runs

## Lengths: 9825448 149 2 9 ... 172 740 598 45091

## Values : FALSE TRUE FALSE TRUE ... TRUE FALSE TRUE FALSEIn terms of memory, the filtered version requires less resources. Although this will depend on how rich the data set is and how aggressive was the filtering step.

## Compare the size in Mb

round(c(

fullCov = object.size(fullCov),

filteredCov = object.size(filteredCov)

) / 1024^2, 1)## fullCov filteredCov

## 22.7 8.5Note that with your own data, filtering for bases where at least one sample has coverage greater than 2 might not make sense: maybe you need a higher or lower filter. The amount of bases remaining after filtering will impact how long the analysis will take to complete. We thus recommend exploring this before proceeding.

Expressed region-level analysis

Via regionMatrix()

Now that we have the data, we can identify expressed regions (ERs) by using a cutoff of 30 on the base-level mean coverage from these 12 samples. Once the regions have been identified, we can calculate a coverage matrix with one row per ER and one column per sample (12 in this case). For doing this calculation we need to know the length of the sequence reads, which in this study were 76 bp long.

Note that for this type of analysis there is no major benefit of filtering the data. Although it can be done if needed.

## Use regionMatrix()

system.time(regionMat <- regionMatrix(fullCov, cutoff = 30, L = 76))## By using totalMapped equal to targetSize, regionMatrix() assumes that you have normalized the data already in fullCoverage(), loadCoverage() or filterData().## 2024-12-13 15:14:16.205921 regionMatrix: processing chr21## 2024-12-13 15:14:16.206444 filterData: normalizing coverage## 2024-12-13 15:14:16.431282 filterData: done normalizing coverage## 2024-12-13 15:14:18.061592 filterData: originally there were 48129895 rows, now there are 2256 rows. Meaning that 100 percent was filtered.## 2024-12-13 15:14:18.06272 findRegions: identifying potential segments## 2024-12-13 15:14:18.067457 findRegions: segmenting information## 2024-12-13 15:14:18.073177 findRegions: identifying candidate regions## 2024-12-13 15:14:18.106082 findRegions: identifying region clusters## 2024-12-13 15:14:18.239528 getRegionCoverage: processing chr21## 2024-12-13 15:14:18.272355 getRegionCoverage: done processing chr21## 2024-12-13 15:14:18.274566 regionMatrix: calculating coverageMatrix## 2024-12-13 15:14:18.278559 regionMatrix: adjusting coverageMatrix for 'L'## user system elapsed

## 2.004 0.076 2.081

## Explore results

class(regionMat)## [1] "list"

names(regionMat$chr21)## [1] "regions" "coverageMatrix" "bpCoverage"regionMatrix() returns a list of elements of length

equal to the number of chromosomes analyzed. For each chromosome, there

are three pieces of output. The actual ERs are arranged in a

GRanges object named regions.

-

regions is the result from filtering with

filterData()and then defining the regions withfindRegions(). Note that the metadata variablevaluerepresents the mean coverage for the given region whileareais the sum of the base-level coverage (before adjusting for read length) from all samples. -

bpCoverage is a list with the base-level coverage from all

the regions. This information can then be used with

plotRegionCoverage()from derfinderPlot. - coverageMatrix is the matrix with one row per region and one column per sample. The different matrices for each of the chromosomes can then be merged prior to using limma, DESeq2 or other packages. Note that the counts are generally not integers, but can easily be rounded if necessary.

## regions output

regionMat$chr21$regions## GRanges object with 45 ranges and 6 metadata columns:

## seqnames ranges strand | value area indexStart

## <Rle> <IRanges> <Rle> | <numeric> <numeric> <integer>

## 1 chr21 9827018-9827582 * | 313.6717 177224.53 1

## 2 chr21 15457301-15457438 * | 215.0846 29681.68 566

## 3 chr21 20230140-20230192 * | 38.8325 2058.12 704

## 4 chr21 20230445-20230505 * | 41.3245 2520.80 757

## 5 chr21 27253318-27253543 * | 34.9131 7890.37 818

## .. ... ... ... . ... ... ...

## 41 chr21 33039644-33039688 * | 34.4705 1551.1742 2180

## 42 chr21 33040784-33040798 * | 32.1342 482.0133 2225

## 43 chr21 33040890 * | 30.0925 30.0925 2240

## 44 chr21 33040900-33040901 * | 30.1208 60.2417 2241

## 45 chr21 48019401-48019414 * | 31.1489 436.0850 2243

## indexEnd cluster clusterL

## <integer> <Rle> <Rle>

## 1 565 1 565

## 2 703 2 138

## 3 756 3 366

## 4 817 3 366

## 5 1043 4 765

## .. ... ... ...

## 41 2224 17 45

## 42 2239 18 118

## 43 2240 18 118

## 44 2242 18 118

## 45 2256 19 14

## -------

## seqinfo: 1 sequence from an unspecified genome

## Number of regions

length(regionMat$chr21$regions)## [1] 45bpCoverage is the base-level coverage list which can

then be used for plotting.

## Base-level coverage matrices for each of the regions

## Useful for plotting

lapply(regionMat$chr21$bpCoverage[1:2], head, n = 2)## $`1`

## HSB113 HSB123 HSB126 HSB130 HSB135 HSB136 HSB145 HSB153 HSB159 HSB178 HSB92

## 1 93.20 3.32 28.22 5.62 185.17 98.34 5.88 16.71 3.52 15.71 47.40

## 2 124.76 7.25 63.68 11.32 374.85 199.28 10.39 30.53 5.83 29.35 65.04

## HSB97

## 1 36.54

## 2 51.42

##

## $`2`

## HSB113 HSB123 HSB126 HSB130 HSB135 HSB136 HSB145 HSB153 HSB159 HSB178 HSB92

## 566 45.59 7.94 15.92 34.75 141.61 104.21 19.87 38.61 4.97 23.2 13.95

## 567 45.59 7.94 15.92 35.15 141.64 104.30 19.87 38.65 4.97 23.2 13.95

## HSB97

## 566 22.21

## 567 22.21

## Check dimensions. First region is 565 long, second one is 138 bp long.

## The columns match the number of samples (12 in this case).

lapply(regionMat$chr21$bpCoverage[1:2], dim)## $`1`

## [1] 565 12

##

## $`2`

## [1] 138 12The end result of the coverage matrix is shown below. Note that the coverage has been adjusted for read length. Because reads might not fully align inside a given region, the numbers are generally not integers but can be rounded if needed.

## Dimensions of the coverage matrix

dim(regionMat$chr21$coverageMatrix)## [1] 45 12

## Coverage for each region. This matrix can then be used with limma or other pkgs

head(regionMat$chr21$coverageMatrix)## HSB113 HSB123 HSB126 HSB130 HSB135 HSB136

## 1 3653.1093346 277.072106 1397.068687 1106.722895 8987.460401 5570.221054

## 2 333.3740816 99.987237 463.909476 267.354342 1198.713552 1162.313418

## 3 35.3828948 20.153553 30.725394 23.483947 16.786842 17.168947

## 4 42.3398681 29.931579 41.094474 24.724736 32.634080 19.309606

## 5 77.7402631 168.939342 115.059342 171.861974 180.638684 93.503158

## 6 0.7988158 1.770263 1.473421 2.231053 1.697368 1.007895

## HSB145 HSB153 HSB159 HSB178 HSB92 HSB97

## 1 1330.158818 1461.2986829 297.939342 1407.288552 1168.519079 1325.9622371

## 2 257.114210 313.8513139 67.940131 193.695657 127.543553 200.7834228

## 3 22.895921 52.8756585 28.145395 33.127368 23.758816 20.4623685

## 4 33.802632 51.6146040 31.244343 33.576974 29.546183 28.2011836

## 5 90.950526 36.3046051 78.069605 97.151316 100.085790 35.5428946

## 6 1.171316 0.4221053 1.000132 1.139079 1.136447 0.3956579We can then use the coverage matrix and packages such as limma, DESeq2 or edgeR to identify which ERs are differentially expressed.

Find DERs with DESeq2

Here we’ll use DESeq2 to identify the DERs. To use it we need to round the coverage data.

## Loading required package: SummarizedExperiment## Loading required package: MatrixGenerics## Loading required package: matrixStats##

## Attaching package: 'MatrixGenerics'## The following objects are masked from 'package:matrixStats':

##

## colAlls, colAnyNAs, colAnys, colAvgsPerRowSet, colCollapse,

## colCounts, colCummaxs, colCummins, colCumprods, colCumsums,

## colDiffs, colIQRDiffs, colIQRs, colLogSumExps, colMadDiffs,

## colMads, colMaxs, colMeans2, colMedians, colMins, colOrderStats,

## colProds, colQuantiles, colRanges, colRanks, colSdDiffs, colSds,

## colSums2, colTabulates, colVarDiffs, colVars, colWeightedMads,

## colWeightedMeans, colWeightedMedians, colWeightedSds,

## colWeightedVars, rowAlls, rowAnyNAs, rowAnys, rowAvgsPerColSet,

## rowCollapse, rowCounts, rowCummaxs, rowCummins, rowCumprods,

## rowCumsums, rowDiffs, rowIQRDiffs, rowIQRs, rowLogSumExps,

## rowMadDiffs, rowMads, rowMaxs, rowMeans2, rowMedians, rowMins,

## rowOrderStats, rowProds, rowQuantiles, rowRanges, rowRanks,

## rowSdDiffs, rowSds, rowSums2, rowTabulates, rowVarDiffs, rowVars,

## rowWeightedMads, rowWeightedMeans, rowWeightedMedians,

## rowWeightedSds, rowWeightedVars## Loading required package: Biobase## Welcome to Bioconductor

##

## Vignettes contain introductory material; view with

## 'browseVignettes()'. To cite Bioconductor, see

## 'citation("Biobase")', and for packages 'citation("pkgname")'.##

## Attaching package: 'Biobase'## The following object is masked from 'package:MatrixGenerics':

##

## rowMedians## The following objects are masked from 'package:matrixStats':

##

## anyMissing, rowMedians

## Round matrix

counts <- round(regionMat$chr21$coverageMatrix)

## Round matrix and specify design

dse <- DESeqDataSetFromMatrix(counts, pheno, ~ group + gender)## converting counts to integer mode

## Perform DE analysis

dse <- DESeq(dse, test = "LRT", reduced = ~gender, fitType = "local")## estimating size factors## estimating dispersions## gene-wise dispersion estimates## mean-dispersion relationship## final dispersion estimates## fitting model and testing

## Extract results

deseq <- regionMat$chr21$regions

mcols(deseq) <- c(mcols(deseq), results(dse))

## Explore the results

deseq## GRanges object with 45 ranges and 12 metadata columns:

## seqnames ranges strand | value area indexStart

## <Rle> <IRanges> <Rle> | <numeric> <numeric> <integer>

## 1 chr21 9827018-9827582 * | 313.6717 177224.53 1

## 2 chr21 15457301-15457438 * | 215.0846 29681.68 566

## 3 chr21 20230140-20230192 * | 38.8325 2058.12 704

## 4 chr21 20230445-20230505 * | 41.3245 2520.80 757

## 5 chr21 27253318-27253543 * | 34.9131 7890.37 818

## .. ... ... ... . ... ... ...

## 41 chr21 33039644-33039688 * | 34.4705 1551.1742 2180

## 42 chr21 33040784-33040798 * | 32.1342 482.0133 2225

## 43 chr21 33040890 * | 30.0925 30.0925 2240

## 44 chr21 33040900-33040901 * | 30.1208 60.2417 2241

## 45 chr21 48019401-48019414 * | 31.1489 436.0850 2243

## indexEnd cluster clusterL baseMean log2FoldChange lfcSE stat

## <integer> <Rle> <Rle> <numeric> <numeric> <numeric> <numeric>

## 1 565 1 565 2846.2872 -1.6903182 0.831959 0.215262

## 2 703 2 138 451.5196 -1.1640426 0.757490 0.871126

## 3 756 3 366 29.5781 0.0461488 0.458097 3.132082

## 4 817 3 366 36.0603 -0.1866200 0.390920 2.225708

## 5 1043 4 765 101.6468 -0.1387377 0.320166 3.957987

## .. ... ... ... ... ... ... ...

## 41 2224 17 45 20.782035 -0.642056 0.427661 0.6047814

## 42 2239 18 118 6.410542 -0.634321 0.512262 0.5454039

## 43 2240 18 118 0.129717 -0.859549 3.116540 0.0206273

## 44 2242 18 118 0.702291 -0.628285 2.247378 0.5825105

## 45 2256 19 14 5.293293 -1.694563 1.252290 5.7895910

## pvalue padj

## <numeric> <numeric>

## 1 0.6426743 0.997155

## 2 0.3506436 0.997155

## 3 0.0767657 0.863614

## 4 0.1357305 0.997155

## 5 0.0466495 0.862040

## .. ... ...

## 41 0.4367595 0.997155

## 42 0.4602018 0.997155

## 43 0.8857989 0.997155

## 44 0.4453299 0.997155

## 45 0.0161213 0.725460

## -------

## seqinfo: 1 sequence from an unspecified genomeYou can get similar results using edgeR using

these functions: DGEList(), calcNormFactors(),

estimateGLMRobustDisp(), glmFit(), and

glmLRT().

Find DERs with limma

Alternatively, we can find DERs using limma. Here we’ll exemplify a type of test closer to what we’ll do later with the F-statistics approach. First of all, we need to define our models.

## Build models

mod <- model.matrix(~ pheno$group + pheno$gender)

mod0 <- model.matrix(~ pheno$gender)Next, we’ll transform the coverage information using the same default

transformation from analyzeChr().

## Transform coverage

transformedCov <- log2(regionMat$chr21$coverageMatrix + 32)We can then fit the models and get the F-statistic p-values and control the FDR.

##

## Attaching package: 'limma'## The following object is masked from 'package:DESeq2':

##

## plotMA## The following object is masked from 'package:BiocGenerics':

##

## plotMA

## Run limma

fit <- lmFit(transformedCov, mod)

fit0 <- lmFit(transformedCov, mod0)

## Determine DE status for the regions

## Also in https://github.com/LieberInstitute/jaffelab with help and examples

getF <- function(fit, fit0, theData) {

rss1 <- rowSums((fitted(fit) - theData)^2)

df1 <- ncol(fit$coef)

rss0 <- rowSums((fitted(fit0) - theData)^2)

df0 <- ncol(fit0$coef)

fstat <- ((rss0 - rss1) / (df1 - df0)) / (rss1 / (ncol(theData) - df1))

f_pval <- pf(fstat, df1 - df0, ncol(theData) - df1, lower.tail = FALSE)

fout <- cbind(fstat, df1 - 1, ncol(theData) - df1, f_pval)

colnames(fout)[2:3] <- c("df1", "df0")

fout <- data.frame(fout)

return(fout)

}

ff <- getF(fit, fit0, transformedCov)

## Get the p-value and assign it to the regions

limma <- regionMat$chr21$regions

limma$fstat <- ff$fstat

limma$pvalue <- ff$f_pval

limma$padj <- p.adjust(ff$f_pval, "BH")

## Explore the results

limma## GRanges object with 45 ranges and 9 metadata columns:

## seqnames ranges strand | value area indexStart

## <Rle> <IRanges> <Rle> | <numeric> <numeric> <integer>

## 1 chr21 9827018-9827582 * | 313.6717 177224.53 1

## 2 chr21 15457301-15457438 * | 215.0846 29681.68 566

## 3 chr21 20230140-20230192 * | 38.8325 2058.12 704

## 4 chr21 20230445-20230505 * | 41.3245 2520.80 757

## 5 chr21 27253318-27253543 * | 34.9131 7890.37 818

## .. ... ... ... . ... ... ...

## 41 chr21 33039644-33039688 * | 34.4705 1551.1742 2180

## 42 chr21 33040784-33040798 * | 32.1342 482.0133 2225

## 43 chr21 33040890 * | 30.0925 30.0925 2240

## 44 chr21 33040900-33040901 * | 30.1208 60.2417 2241

## 45 chr21 48019401-48019414 * | 31.1489 436.0850 2243

## indexEnd cluster clusterL fstat pvalue padj

## <integer> <Rle> <Rle> <numeric> <numeric> <numeric>

## 1 565 1 565 1.638455 0.2325446 0.581362

## 2 703 2 138 4.307443 0.0677644 0.324601

## 3 756 3 366 1.323342 0.2796406 0.629191

## 4 817 3 366 0.380332 0.5527044 0.863074

## 5 1043 4 765 7.249519 0.0246955 0.309532

## .. ... ... ... ... ... ...

## 41 2224 17 45 3.11799 0.1112440 0.385075

## 42 2239 18 118 3.66184 0.0879543 0.329829

## 43 2240 18 118 3.87860 0.0804175 0.328981

## 44 2242 18 118 4.39338 0.0655381 0.324601

## 45 2256 19 14 6.80915 0.0282970 0.309532

## -------

## seqinfo: 1 sequence from an unspecified genomeIn this simple example, none of the ERs have strong differential expression signal when adjusting for an FDR of 5%.

table(limma$padj < 0.05, deseq$padj < 0.05)##

## FALSE

## FALSE 45Via railMatrix()

If you have Rail output, you can get

the same results faster than with regionMatrix(). Rail will create the summarized coverage

BigWig file for you, but we are not including it in this package due to

its size. So, lets create it.

## Calculate the mean: this step takes a long time with many samples

meanCov <- Reduce("+", fullCov$chr21) / ncol(fullCov$chr21)

## Save it on a bigwig file called meanChr21.bw

createBw(list("chr21" = DataFrame("meanChr21" = meanCov)),

keepGR =

FALSE

)## 2024-12-13 15:14:21.373129 coerceGR: coercing sample meanChr21## 2024-12-13 15:14:21.467931 createBwSample: exporting bw for sample meanChr21Now that we have the files Rail creates

for us, we can use railMatrix().

## Identify files to use

summaryFile <- "meanChr21.bw"

## We had already found the sample BigWig files and saved it in the object 'files'

## Lets just rename it to sampleFiles for clarity.

sampleFiles <- files

## Get the regions

system.time(

regionMat.rail <- railMatrix(

chrs = "chr21", summaryFiles = summaryFile,

sampleFiles = sampleFiles, L = 76, cutoff = 30, maxClusterGap = 3000L

)

)## 2024-12-13 15:14:23.823365 loadCoverage: finding chromosome lengths## 2024-12-13 15:14:23.829149 loadCoverage: loading BigWig file meanChr21.bw## 2024-12-13 15:14:24.111509 loadCoverage: applying the cutoff to the merged data## 2024-12-13 15:14:25.200279 filterData: originally there were 48129895 rows, now there are 48129895 rows. Meaning that 0 percent was filtered.## 2024-12-13 15:14:25.411678 filterData: originally there were 48129895 rows, now there are 2256 rows. Meaning that 100 percent was filtered.## 2024-12-13 15:14:25.413871 findRegions: identifying potential segments## 2024-12-13 15:14:25.416119 findRegions: segmenting information## 2024-12-13 15:14:25.416469 .getSegmentsRle: segmenting with cutoff(s) 30## 2024-12-13 15:14:25.421773 findRegions: identifying candidate regions## 2024-12-13 15:14:25.459362 findRegions: identifying region clusters## 2024-12-13 15:14:25.499135 railMatrix: processing regions 1 to 45## 2024-12-13 15:14:25.503141 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB113.bw## 2024-12-13 15:14:25.547518 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB123.bw## 2024-12-13 15:14:25.592582 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB126.bw## 2024-12-13 15:14:25.6365 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB130.bw## 2024-12-13 15:14:25.679987 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB135.bw## 2024-12-13 15:14:25.723813 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB136.bw## 2024-12-13 15:14:25.768189 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB145.bw## 2024-12-13 15:14:25.813215 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB153.bw## 2024-12-13 15:14:25.858534 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB159.bw## 2024-12-13 15:14:25.903878 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB178.bw## 2024-12-13 15:14:25.948801 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB92.bw## 2024-12-13 15:14:25.994235 railMatrix: processing file /__w/_temp/Library/derfinderData/extdata/AMY/HSB97.bw## user system elapsed

## 2.196 0.032 2.229When you take into account the time needed to load the data

(fullCoverage()) and then creating the matrix

(regionMatrix()), railMatrix() is faster and

less memory intensive.

The objects are not identical due to small rounding errors, but it’s nothing to worry about.

## Overall not identical due to small rounding errors

identical(regionMat, regionMat.rail)## [1] FALSE

## Actual regions are the same

identical(ranges(regionMat$chr21$regions), ranges(regionMat.rail$chr21$regions))## [1] TRUE

## When you round, the small differences go away

identical(

round(regionMat$chr21$regions$value, 4),

round(regionMat.rail$chr21$regions$value, 4)

)## [1] TRUE## [1] TRUESingle base-level F-statistics analysis

One form of base-level differential expression analysis implemented in derfinder is to calculate F-statistics for every base and use them to define candidate differentially expressed regions. This type of analysis is further explained in this section.

Models

Once we have the base-level coverage data for all 12 samples, we can construct the models. In this case, we want to find differences between fetal and adult samples while adjusting for gender and a proxy of the library size.

We can use sampleDepth() and it’s helper function

collapseFullCoverage() to get a proxy of the library size.

Note that you would normally use the unfiltered data from all the

chromosomes in this step and not just one.

## Get some idea of the library sizes

sampleDepths <- sampleDepth(collapseFullCoverage(fullCov), 1)## 2024-12-13 15:14:26.1838 sampleDepth: Calculating sample quantiles## 2024-12-13 15:14:26.276532 sampleDepth: Calculating sample adjustments

sampleDepths## HSB113.100% HSB123.100% HSB126.100% HSB130.100% HSB135.100% HSB136.100%

## 19.82106 19.40505 19.53045 19.52017 20.33392 19.97758

## HSB145.100% HSB153.100% HSB159.100% HSB178.100% HSB92.100% HSB97.100%

## 19.49827 19.41285 19.24186 19.44252 19.55904 19.47733sampleDepth() is similar to

calcNormFactors() from metagenomeSeq

with some code underneath tailored for the type of data we are using.

collapseFullCoverage() is only needed to deal with the size

of the data.

We can then define the nested models we want to use using

makeModels(). This is a helper function that assumes that

you will always adjust for the library size. You then need to define the

variable to test, in this case we are comparing fetal vs adult samples.

Optionally, you can adjust for other sample covariates, such as the

gender in this case.

## Define models

models <- makeModels(sampleDepths,

testvars = pheno$group,

adjustvars = pheno[, c("gender")]

)

## Explore the models

lapply(models, head)## $mod

## (Intercept) testvarsadult sampleDepths adjustVar1M

## 1 1 0 19.82106 0

## 2 1 0 19.40505 1

## 3 1 0 19.53045 1

## 4 1 0 19.52017 1

## 5 1 0 20.33392 0

## 6 1 0 19.97758 0

##

## $mod0

## (Intercept) sampleDepths adjustVar1M

## 1 1 19.82106 0

## 2 1 19.40505 1

## 3 1 19.53045 1

## 4 1 19.52017 1

## 5 1 20.33392 0

## 6 1 19.97758 0Note how the null model (mod0) is nested in the

alternative model (mod). Use the same models for all your

chromosomes unless you have a specific reason to use chromosome-specific

models. Note that derfinder

is very flexible and works with any type of nested model.

Find candidate DERs

Next, we can find candidate differentially expressed regions (DERs) using as input the segments of the genome where at least one sample has coverage greater than 2. That is, the filtered coverage version we created previously.

The main function in derfinder

for this type of analysis is analyzeChr(). It works at a

chromosome level and runs behinds the scenes several other derfinder

functions. To use it, you have to provide the models, the grouping

information, how to calculate the F-statistic cutoff and most

importantly, the number of permutations.

By default analyzeChr() will use a theoretical cutoff.

In this example, we use the cutoff that would correspond to a p-value of

0.05. To assign p-values to the candidate DERs, derfinder

permutes the rows of the model matrices, re-calculates the F-statistics

and identifies null regions. Then it compares the area of the observed

regions versus the areas from the null regions to assign an empirical

p-value.

In this example we will use twenty permutations, although in a real case scenario you might consider a larger number of permutations.

In real scenario, you might consider saving the results from all the chromosomes in a given directory. Here we will use analysisResults. For each chromosome you analyze, a new directory with the chromosome-specific data will be created. So in this case, we will have analysisResults/chr21.

## Create a analysis directory

dir.create("analysisResults")

originalWd <- getwd()

setwd(file.path(originalWd, "analysisResults"))

## Perform differential expression analysis

system.time(results <- analyzeChr(

chr = "chr21", filteredCov$chr21, models,

groupInfo = pheno$group, writeOutput = TRUE, cutoffFstat = 5e-02,

nPermute = 20, seeds = 20140923 + seq_len(20), returnOutput = TRUE

))## 2024-12-13 15:14:27.271576 analyzeChr: Pre-processing the coverage data## 2024-12-13 15:14:29.167139 analyzeChr: Calculating statistics## 2024-12-13 15:14:29.16957 calculateStats: calculating the F-statistics## 2024-12-13 15:14:29.342532 analyzeChr: Calculating pvalues## 2024-12-13 15:14:29.343051 analyzeChr: Using the following theoretical cutoff for the F-statistics 5.31765507157871## 2024-12-13 15:14:29.343892 calculatePvalues: identifying data segments## 2024-12-13 15:14:29.34643 findRegions: segmenting information## 2024-12-13 15:14:29.366982 findRegions: identifying candidate regions## 2024-12-13 15:14:29.393081 findRegions: identifying region clusters## 2024-12-13 15:14:29.49669 calculatePvalues: calculating F-statistics for permutation 1 and seed 20140924## 2024-12-13 15:14:29.625074 findRegions: segmenting information## 2024-12-13 15:14:29.649201 findRegions: identifying candidate regions## 2024-12-13 15:14:29.678529 calculatePvalues: calculating F-statistics for permutation 2 and seed 20140925## 2024-12-13 15:14:29.80353 findRegions: segmenting information## 2024-12-13 15:14:29.827741 findRegions: identifying candidate regions## 2024-12-13 15:14:29.856591 calculatePvalues: calculating F-statistics for permutation 3 and seed 20140926## 2024-12-13 15:14:29.971267 findRegions: segmenting information## 2024-12-13 15:14:29.992923 findRegions: identifying candidate regions## 2024-12-13 15:14:30.022065 calculatePvalues: calculating F-statistics for permutation 4 and seed 20140927## 2024-12-13 15:14:30.145112 findRegions: segmenting information## 2024-12-13 15:14:30.167778 findRegions: identifying candidate regions## 2024-12-13 15:14:30.197581 calculatePvalues: calculating F-statistics for permutation 5 and seed 20140928## 2024-12-13 15:14:30.312506 findRegions: segmenting information## 2024-12-13 15:14:30.348665 findRegions: identifying candidate regions## 2024-12-13 15:14:30.3777 calculatePvalues: calculating F-statistics for permutation 6 and seed 20140929## 2024-12-13 15:14:30.492855 findRegions: segmenting information## 2024-12-13 15:14:30.514766 findRegions: identifying candidate regions## 2024-12-13 15:14:30.544214 calculatePvalues: calculating F-statistics for permutation 7 and seed 20140930## 2024-12-13 15:14:30.666399 findRegions: segmenting information## 2024-12-13 15:14:30.687197 findRegions: identifying candidate regions## 2024-12-13 15:14:30.716381 calculatePvalues: calculating F-statistics for permutation 8 and seed 20140931## 2024-12-13 15:14:30.842429 findRegions: segmenting information## 2024-12-13 15:14:30.864947 findRegions: identifying candidate regions## 2024-12-13 15:14:30.894309 calculatePvalues: calculating F-statistics for permutation 9 and seed 20140932## 2024-12-13 15:14:31.010431 findRegions: segmenting information## 2024-12-13 15:14:31.033069 findRegions: identifying candidate regions## 2024-12-13 15:14:31.063354 calculatePvalues: calculating F-statistics for permutation 10 and seed 20140933## 2024-12-13 15:14:31.186974 findRegions: segmenting information## 2024-12-13 15:14:31.211543 findRegions: identifying candidate regions## 2024-12-13 15:14:31.242975 calculatePvalues: calculating F-statistics for permutation 11 and seed 20140934## 2024-12-13 15:14:31.35956 findRegions: segmenting information## 2024-12-13 15:14:31.390056 findRegions: identifying candidate regions## 2024-12-13 15:14:31.419316 calculatePvalues: calculating F-statistics for permutation 12 and seed 20140935## 2024-12-13 15:14:31.534753 findRegions: segmenting information## 2024-12-13 15:14:31.558415 findRegions: identifying candidate regions## 2024-12-13 15:14:31.589252 calculatePvalues: calculating F-statistics for permutation 13 and seed 20140936## 2024-12-13 15:14:31.712761 findRegions: segmenting information## 2024-12-13 15:14:31.736979 findRegions: identifying candidate regions## 2024-12-13 15:14:31.765638 calculatePvalues: calculating F-statistics for permutation 14 and seed 20140937## 2024-12-13 15:14:31.889913 findRegions: segmenting information## 2024-12-13 15:14:32.657995 findRegions: identifying candidate regions## 2024-12-13 15:14:32.68806 calculatePvalues: calculating F-statistics for permutation 15 and seed 20140938## 2024-12-13 15:14:32.802856 findRegions: segmenting information## 2024-12-13 15:14:32.82517 findRegions: identifying candidate regions## 2024-12-13 15:14:32.854095 calculatePvalues: calculating F-statistics for permutation 16 and seed 20140939## 2024-12-13 15:14:32.975982 findRegions: segmenting information## 2024-12-13 15:14:32.996601 findRegions: identifying candidate regions## 2024-12-13 15:14:33.025195 calculatePvalues: calculating F-statistics for permutation 17 and seed 20140940## 2024-12-13 15:14:33.150832 findRegions: segmenting information## 2024-12-13 15:14:33.175271 findRegions: identifying candidate regions## 2024-12-13 15:14:33.204966 calculatePvalues: calculating F-statistics for permutation 18 and seed 20140941## 2024-12-13 15:14:33.319455 findRegions: segmenting information## 2024-12-13 15:14:33.341873 findRegions: identifying candidate regions## 2024-12-13 15:14:33.371071 calculatePvalues: calculating F-statistics for permutation 19 and seed 20140942## 2024-12-13 15:14:33.492903 findRegions: segmenting information## 2024-12-13 15:14:33.514383 findRegions: identifying candidate regions## 2024-12-13 15:14:33.543266 calculatePvalues: calculating F-statistics for permutation 20 and seed 20140943## 2024-12-13 15:14:33.659283 findRegions: segmenting information## 2024-12-13 15:14:33.689104 findRegions: identifying candidate regions## 2024-12-13 15:14:33.728269 calculatePvalues: calculating the p-values## 2024-12-13 15:14:33.776876 analyzeChr: Annotating regions## No annotationPackage supplied. Trying org.Hs.eg.db.## Loading required package: org.Hs.eg.db## Warning in library(package, lib.loc = lib.loc, character.only = TRUE,

## logical.return = TRUE, : there is no package called 'org.Hs.eg.db'## Could not load org.Hs.eg.db. Will continue without annotation## Getting TSS and TSE.## Getting CSS and CSE.## Getting exons.## .....## user system elapsed

## 29.474 2.392 29.263To speed up analyzeChr(), you might need to use several

cores via the mc.cores argument. If memory is limiting, you

might want to use a smaller chunksize (default is 5

million). Note that if you use too many cores, you might hit the

input/output ceiling of your data network and/or hard drives speed.

Before running with a large number of permutations we recommend exploring how long each permutation cycle takes using a single permutation.

Note that analyzing each chromosome with a large number of

permutations and a rich data set can take several hours, so we recommend

running one job running analyzeChr() per chromosome, and

then merging the results via mergeResults(). This process

is further described in the advanced derfinder

vignette.

Explore results

When using returnOutput = TRUE,

analyzeChr() will return a list with the results to explore

interactively. However, by default it writes the results to disk (one

.Rdata file per result).

The following code explores the results.

## Explore

names(results)## [1] "timeinfo" "optionsStats" "coveragePrep" "fstats" "regions"

## [6] "annotation"optionStats

optionStats stores the main options used in the

analyzeChr() call including the models used, the type of

cutoff, number of permutations, seeds for the permutations. All this

information can be useful to reproduce the analysis.

## Explore optionsStats

names(results$optionsStats)## [1] "models" "cutoffPre" "scalefac" "chunksize"

## [5] "cutoffFstat" "cutoffType" "nPermute" "seeds"

## [9] "groupInfo" "lowMemDir" "analyzeCall" "cutoffFstatUsed"

## [13] "smooth" "smoothFunction" "weights" "returnOutput"

## Call used

results$optionsStats$analyzeCall## analyzeChr(chr = "chr21", coverageInfo = filteredCov$chr21, models = models,

## cutoffFstat = 0.05, nPermute = 20, seeds = 20140923 + seq_len(20),

## groupInfo = pheno$group, writeOutput = TRUE, returnOutput = TRUE)coveragePrep

coveragePrep has the result from the

preprocessCoverage() step. This includes the genomic

position index, the mean coverage (after scaling and the log2

transformation) for all the samples, and the group mean coverages. By

default, the chunks are written to disk in

optionsStats$lowMemDir (chr21/chunksDir in this example) to

help reduce the required memory resources. Otherwise it is stored in

coveragePrep$coverageProcessed.

## Explore coveragePrep

names(results$coveragePrep)## [1] "coverageProcessed" "mclapplyIndex" "position"

## [4] "meanCoverage" "groupMeans"

## Group means

results$coveragePrep$groupMeans## $fetal

## numeric-Rle of length 130356 with 116452 runs

## Lengths: 1 1 1 1 ... 1 1 1

## Values : 0.401667 0.428333 0.435000 0.461667 ... 1.34000 1.33333 1.24833

##

## $adult

## numeric-Rle of length 130356 with 119226 runs

## Lengths: 1 1 1 1 ... 1 1 1

## Values : 0.406667 0.413333 0.430000 0.448333 ... 1.77667 1.73833 1.62667fstats

The F-statistics are then stored in fstats. These are

calculated using calculateStats().

## Explore optionsStats

results$fstats## numeric-Rle of length 130356 with 126807 runs

## Lengths: 1 1 1 ... 1 1

## Values : 0.01922610 0.02996937 0.02066332 ... 2.507611 2.324638

## Note that the length matches the number of bases used

identical(length(results$fstats), sum(results$coveragePrep$position))## [1] TRUEregions

The candidate DERs and summary results from the permutations is then

stored in regions. This is the output from

calculatePvalues() which uses several underneath other

functions including calculateStats() and

findRegions().

## Explore regions

names(results$regions)## [1] "regions" "nullStats" "nullWidths" "nullPermutation"For the null regions, the summary information is composed of the mean

F-statistic for the null regions (regions$nullStats), the

width of the null regions (regions$nullWidths), and the

permutation number under which they were identified

(regions$nullPermutation).

## Permutation summary information

results$regions[2:4]## $nullStats

## numeric-Rle of length 13994 with 13994 runs

## Lengths: 1 1 1 1 ... 1 1 1

## Values : 5.43461 5.71738 6.37821 6.33171 ... 5.35554 5.36614 5.62516

##

## $nullWidths

## integer-Rle of length 13994 with 12365 runs

## Lengths: 2 1 1 1 1 1 3 1 ... 1 1 1 2 1 1 4 1

## Values : 1 24 7 1 32 2 1 11 ... 1 3 45 4 28 6 1 2

##

## $nullPermutation

## integer-Rle of length 13994 with 20 runs

## Lengths: 246 350 574 554 396 462 482 ... 746 114 1460 428 802 278

## Values : 1 2 3 4 5 6 7 ... 15 16 17 18 19 20The most important part of the output is the GRanges

object with the candidate DERs shown below.

## Candidate DERs

results$regions$regions## GRanges object with 591 ranges and 14 metadata columns:

## seqnames ranges strand | value area indexStart

## <Rle> <IRanges> <Rle> | <numeric> <numeric> <integer>

## up chr21 47610386-47610682 * | 11.10304 3297.60 122158

## up chr21 40196145-40196444 * | 10.06142 3018.43 76110

## up chr21 27253616-27253948 * | 8.43488 2808.82 22019

## up chr21 22115534-22115894 * | 7.23645 2612.36 12274

## up chr21 22914853-22915064 * | 9.78066 2073.50 17318

## .. ... ... ... . ... ... ...

## up chr21 35889784 * | 5.31952 5.31952 60088

## up chr21 47610093 * | 5.31912 5.31912 121865

## up chr21 16333728 * | 5.31881 5.31881 5048

## up chr21 34001896 * | 5.31871 5.31871 32577

## up chr21 34809571 * | 5.31801 5.31801 43694

## indexEnd cluster clusterL meanCoverage meanfetal meanadult

## <integer> <Rle> <Rle> <numeric> <numeric> <numeric>

## up 122454 138 933 1.597952 0.82289 2.373013

## up 76409 71 1323 1.303508 2.02532 0.581694

## up 22351 28 407 33.657858 42.46704 24.848674

## up 12634 9 694 0.964464 1.71906 0.209871

## up 17529 21 217 2.838978 4.23593 1.442028

## .. ... ... ... ... ... ...

## up 60088 51 742 2.75417 3.36000 2.14833

## up 121865 138 933 1.45583 0.77500 2.13667

## up 5048 1 9 1.19500 1.23167 1.15833

## up 32577 38 1428 1.71250 2.33333 1.09167

## up 43694 46 149 2.95000 2.87833 3.02167

## log2FoldChangeadultvsfetal pvalues significant qvalues

## <numeric> <numeric> <factor> <numeric>

## up 1.527949 0.00278671 TRUE 0.738407

## up -1.799818 0.00378707 TRUE 0.738407

## up -0.773175 0.00464452 TRUE 0.738407

## up -3.034045 0.00535906 TRUE 0.738407

## up -1.554578 0.00793140 TRUE 0.738407

## .. ... ... ... ...

## up -0.6452433 0.997856 FALSE 0.974463

## up 1.4630937 0.998285 FALSE 0.974463

## up -0.0885613 0.998714 FALSE 0.974463

## up -1.0958600 0.998714 FALSE 0.974463

## up 0.0701108 0.999571 FALSE 0.974463

## significantQval

## <factor>

## up FALSE

## up FALSE

## up FALSE

## up FALSE

## up FALSE

## .. ...

## up FALSE

## up FALSE

## up FALSE

## up FALSE

## up FALSE

## -------

## seqinfo: 1 sequence from an unspecified genome; no seqlengthsThe metadata columns are:

- value is the mean F-statistics for the candidate DER.

- area is the sum of the F-statistics for the candidate DER.

- indexStart Relates the genomic start coordinate with the filtered genomic index start coordinate.

- indexEnd Similarly as above but for the end coordinates.

- cluster The cluster id to which this candidate DER belongs to.

- clusterL The length of the cluster to which this candidate DER belongs to.

- meanCoverage The base level mean coverage for the candidate DER.

- meanfetal In this example, the mean coverage for the fetal samples.

- meanadult In this example, the mean coverage for the adult samples.

- log2FoldChangeadultvsfetal In this example, the log2 fold change between adult vs fetal samples.

- pvalues The p-value for the candidate DER.

- significant By default, whether the p-value is less than 0.05 or not.

- qvalues The q-value for the candidate DER calculated with qvalue.

- significantQval By default, whether the q-value is less than 0.10 or not.

Note that for this type of analysis you might want to try a few coverage cutoffs and/or F-statistic cutoffs. One quick way to evaluate the results is to compare the width of the regions.

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 1.00 1.00 4.00 17.98 17.00 361.00## [1] 68

## Width of candidate DERs

sig <- as.logical(results$regions$regions$significant)

summary(width(results$regions$regions[sig]))## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 65.0 81.5 97.0 127.8 122.0 361.0## [1] 35Nearest annotation

analyzeChr() will find the nearest annotation feature

using matchGenes() from bumphunter

(version >= 1.7.3). This information is useful considering that the

candidate DERs were identified without relying on annotation. Yet at the

end, we are interested to check if they are inside a known exon,

upstream a gene, etc.

## Nearest annotation

head(results$annotation)## name annotation description region distance subregion insideDistance

## 1 <NA> <NA> inside exon inside 38056 inside exon 0

## 2 <NA> <NA> inside exon inside 18390 inside exon 0

## 3 <NA> <NA> inside exon inside 289498 inside exon 0

## 4 <NA> <NA> inside exon inside 59532 inside exon 0

## 5 <NA> <NA> downstream downstream 544220 <NA> NA

## 6 <NA> <NA> inside exon inside 59315 inside exon 0

## exonnumber nexons UTR strand geneL codingL Geneid

## 1 22 22 3'UTR - 39700 NA 4047

## 2 10 10 3'UTR + 19123 NA 2114

## 3 16 16 3'UTR - 290585 NA 351

## 4 7 7 inside transcription region - 60513 NA 387486

## 5 NA 15 <NA> + 541884 NA 4685

## 6 7 7 inside transcription region - 60513 NA 387486

## subjectHits

## 1 34356

## 2 17340

## 3 31161

## 4 32699

## 5 37133

## 6 32699For more details on the output please check the bumphunter package.

Check the section about non-human data (specifically, using annotation different from hg19) on the advanced vignette.

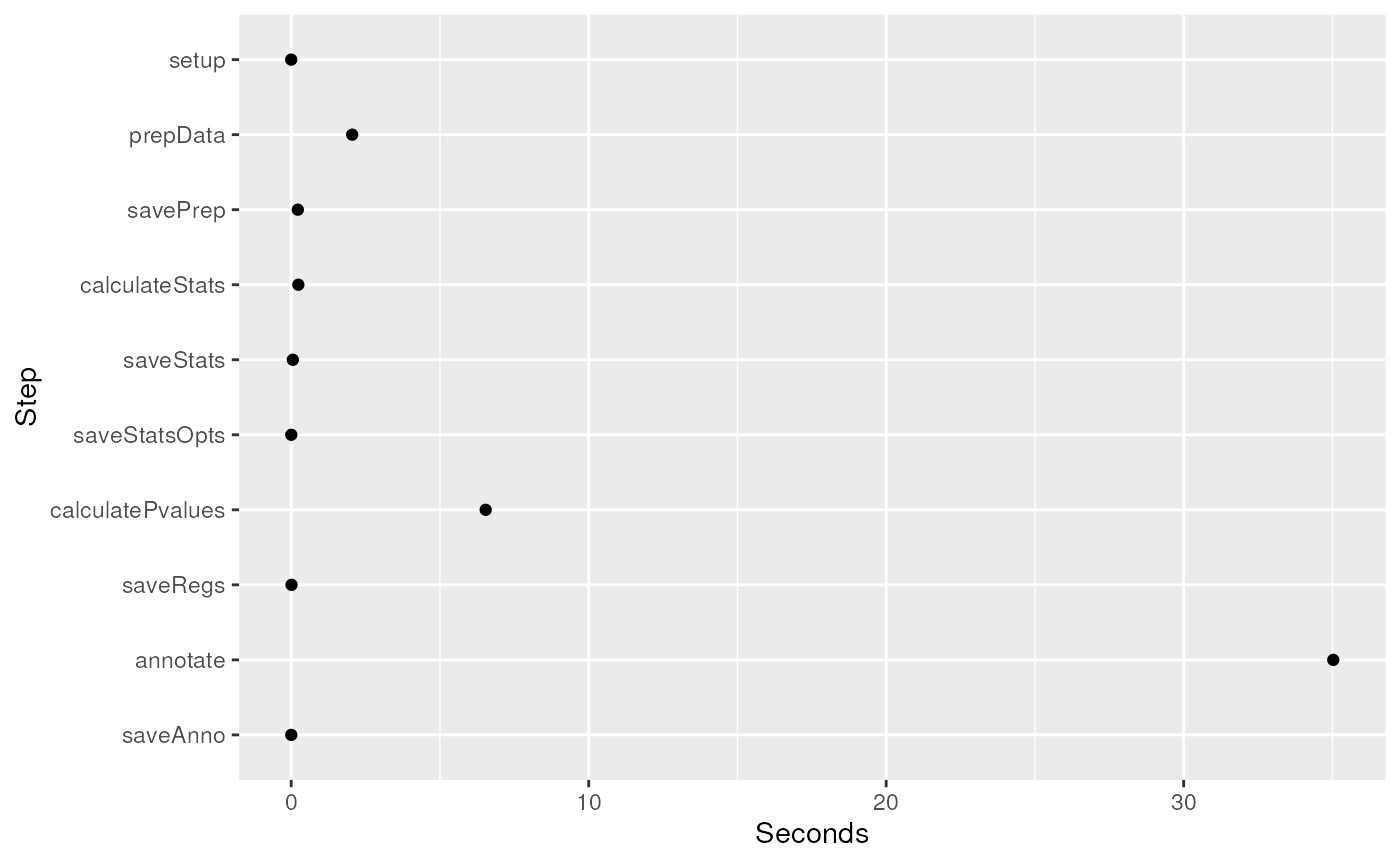

Time spent

The final piece is the wallclock time spent during each of the steps

in analyzeChr(). You can then make a plot with this

information as shown in Figure @ref(fig:exploreTime).

## Time spent

results$timeinfo## init setup prepData

## "2024-12-13 15:14:27 UTC" "2024-12-13 15:14:27 UTC" "2024-12-13 15:14:28 UTC"

## savePrep calculateStats saveStats

## "2024-12-13 15:14:29 UTC" "2024-12-13 15:14:29 UTC" "2024-12-13 15:14:29 UTC"

## saveStatsOpts calculatePvalues saveRegs

## "2024-12-13 15:14:29 UTC" "2024-12-13 15:14:33 UTC" "2024-12-13 15:14:33 UTC"

## annotate saveAnno

## "2024-12-13 15:14:56 UTC" "2024-12-13 15:14:56 UTC"

## Use this information to make a plot

timed <- diff(results$timeinfo)

timed.df <- data.frame(Seconds = as.numeric(timed), Step = factor(names(timed),

levels = rev(names(timed))

))

library("ggplot2")

ggplot(timed.df, aes(y = Step, x = Seconds)) +

geom_point()

Seconds used to run each step in analyzeChr().

Merge results

Once you have analyzed each chromosome using

analyzeChr(), you can use mergeResults() to

merge the results. This function does not return an object in R but

instead creates several Rdata files with the main results from the

different chromosomes.

## Go back to the original directory

setwd(originalWd)

## Merge results from several chromosomes. In this case we only have one.

mergeResults(

chrs = "chr21", prefix = "analysisResults",

genomicState = genomicState$fullGenome,

optionsStats = results$optionsStats

)## 2024-12-13 15:14:57.528686 mergeResults: Saving options used## 2024-12-13 15:14:57.530462 Loading chromosome chr21## 2024-12-13 15:14:57.576165 mergeResults: calculating FWER## 2024-12-13 15:14:57.600286 mergeResults: Saving fullNullSummary## 2024-12-13 15:14:57.608068 mergeResults: Re-calculating the p-values## 2024-12-13 15:14:57.653293 mergeResults: Saving fullRegions## 2024-12-13 15:14:57.658512 mergeResults: assigning genomic states## 2024-12-13 15:14:57.725334 annotateRegions: counting## 2024-12-13 15:14:57.775869 annotateRegions: annotating## 2024-12-13 15:14:57.793797 mergeResults: Saving fullAnnotatedRegions## 2024-12-13 15:14:57.79533 mergeResults: Saving fullFstats## 2024-12-13 15:14:57.829778 mergeResults: Saving fullTime

## Files created by mergeResults()

dir("analysisResults", pattern = ".Rdata")## [1] "fullAnnotatedRegions.Rdata" "fullFstats.Rdata"

## [3] "fullNullSummary.Rdata" "fullRegions.Rdata"

## [5] "fullTime.Rdata" "optionsMerge.Rdata"- fullFstats.Rdata contains a list with one element per chromosome. Per chromosome it has the F-statistics.

- fullNullSummary.Rdata is a list with the summary information from the null regions stored for each chromosome.

- fullTime.Rdata has the timing information for each chromosome as a list.

optionsMerge

For reproducibility purposes, the options used the merge the results

are stored in optionsMerge.

## Options used to merge

load(file.path("analysisResults", "optionsMerge.Rdata"))

## Contents

names(optionsMerge)## [1] "chrs" "significantCut" "minoverlap" "mergeCall"

## [5] "cutoffFstatUsed" "optionsStats"

## Merge call

optionsMerge$mergeCall## mergeResults(chrs = "chr21", prefix = "analysisResults", genomicState = genomicState$fullGenome,

## optionsStats = results$optionsStats)fullRegions

The main result from mergeResults() is in

fullRegions. This is a GRanges object with the

candidate DERs from all the chromosomes. It also includes the nearest

annotation metadata as well as FWER adjusted p-values (fwer)

and whether the FWER adjusted p-value is less than 0.05

(significantFWER).

## Load all the regions

load(file.path("analysisResults", "fullRegions.Rdata"))

## Metadata columns

names(mcols(fullRegions))## [1] "value" "area"

## [3] "indexStart" "indexEnd"

## [5] "cluster" "clusterL"

## [7] "meanCoverage" "meanfetal"

## [9] "meanadult" "log2FoldChangeadultvsfetal"

## [11] "pvalues" "significant"

## [13] "qvalues" "significantQval"

## [15] "name" "annotation"

## [17] "description" "region"

## [19] "distance" "subregion"

## [21] "insideDistance" "exonnumber"

## [23] "nexons" "UTR"

## [25] "annoStrand" "geneL"

## [27] "codingL" "Geneid"

## [29] "subjectHits" "fwer"

## [31] "significantFWER"Note that analyzeChr() only has the information for a

given chromosome at a time, so mergeResults() re-calculates

the p-values and q-values using the information from all the

chromosomes.

fullAnnotatedRegions

In preparation for visually exploring the results,

mergeResults() will run annotateRegions()

which counts how many known exons, introns and intergenic segments each

candidate DER overlaps (by default with a minimum overlap of 20bp).

annotateRegions() uses a summarized version of the genome

annotation created with makeGenomicState(). For this

example, we can use the data included in derfinder

which is the summarized annotation of hg19 for chromosome 21.

## Load annotateRegions() output

load(file.path("analysisResults", "fullAnnotatedRegions.Rdata"))

## Information stored

names(fullAnnotatedRegions)## [1] "countTable" "annotationList"

## Take a peak

lapply(fullAnnotatedRegions, head)## $countTable

## exon intergenic intron

## 1 1 0 0

## 2 1 0 0

## 3 1 0 0

## 4 1 0 0

## 5 0 1 0

## 6 1 0 0

##

## $annotationList

## GRangesList object of length 6:

## $`1`

## GRanges object with 1 range and 4 metadata columns:

## seqnames ranges strand | theRegion tx_id

## <Rle> <IRanges> <Rle> | <character> <IntegerList>

## 4871 chr21 47609038-47611149 - | exon 73448,73449,73450,...

## tx_name gene

## <CharacterList> <IntegerList>

## 4871 uc002zij.3,uc002zik.2,uc002zil.2,... 170

## -------

## seqinfo: 1 sequence from hg19 genome

##

## $`2`

## GRanges object with 1 range and 4 metadata columns:

## seqnames ranges strand | theRegion tx_id

## <Rle> <IRanges> <Rle> | <character> <IntegerList>

## 1189 chr21 40194598-40196878 + | exon 72757,72758

## tx_name gene

## <CharacterList> <IntegerList>

## 1189 uc002yxf.3,uc002yxg.4 77

## -------

## seqinfo: 1 sequence from hg19 genome

##

## $`3`

## GRanges object with 1 range and 4 metadata columns:

## seqnames ranges strand | theRegion tx_id

## <Rle> <IRanges> <Rle> | <character> <IntegerList>

## 2965 chr21 27252861-27254082 - | exon 73066,73067,73068,...

## tx_name gene

## <CharacterList> <IntegerList>

## 2965 uc002ylz.3,uc002yma.3,uc002ymb.3,... 139

## -------

## seqinfo: 1 sequence from hg19 genome

##

## ...

## <3 more elements>ChIP-seq differential binding

As of version 1.5.27 derfinder has parameters that allow smoothing of the single base-level F-statistics before determining DERs. This allows finding differentially bounded regions (peaks) using ChIP-seq data. In general, ChIP-seq studies are smaller than RNA-seq studies which means that the single base-level F-statistics approach is well suited for differential binding analysis.

To smooth the F-statistics use smooth = TRUE in

analyzeChr(). The default smoothing function is

bumphunter::locfitByCluster() and all its parameters can be

passed specified in the call to analyzeChr(). In

particular, the minNum and bpSpan arguments

are important. We recommend setting minNum to the minimum

read length and bpSpan to the average peak length expected

in the ChIP-seq data being analyzed. Smoothing the F-statistics will

take longer but not use significantly more memory than the default

behavior. So take this into account when choosing the number of

permutations to run.

Visually explore results

Optionally, we can use the addon package derfinderPlot to visually explore the results.

To make the region level plots, we will need to extract the region

level coverage data. We can do so using getRegionCoverage()

as shown below.

## Find overlaps between regions and summarized genomic annotation

annoRegs <- annotateRegions(fullRegions, genomicState$fullGenome)## 2024-12-13 15:14:58.221812 annotateRegions: counting## 2024-12-13 15:14:58.276871 annotateRegions: annotating

## Indeed, the result is the same because we only used chr21

identical(annoRegs, fullAnnotatedRegions)## [1] FALSE

## Get the region coverage

regionCov <- getRegionCoverage(fullCov, fullRegions)## 2024-12-13 15:14:58.351932 getRegionCoverage: processing chr21## 2024-12-13 15:14:58.422841 getRegionCoverage: done processing chr21

## Explore the result

head(regionCov[[1]])## HSB113 HSB123 HSB126 HSB130 HSB135 HSB136 HSB145 HSB153 HSB159 HSB178 HSB92

## 1 0.68 0.44 0.48 0.36 0.19 2.34 1.29 1.77 2.21 2.69 1.89

## 2 0.60 0.44 0.48 0.36 0.19 2.30 1.29 1.77 2.21 2.64 1.86

## 3 0.60 0.40 0.48 0.32 0.19 2.39 1.37 1.81 2.31 2.69 1.89

## 4 0.64 0.40 0.48 0.32 0.19 2.61 1.42 1.89 2.36 2.88 1.89

## 5 0.64 0.40 0.48 0.36 0.19 2.65 1.42 1.93 2.36 2.88 1.96

## 6 0.60 0.44 0.48 0.39 0.23 2.65 1.59 1.93 2.36 2.83 1.93

## HSB97

## 1 3.57

## 2 3.60

## 3 3.60

## 4 3.60

## 5 3.70

## 6 3.70With this, we are all set to visually explore the results.

library("derfinderPlot")

## Overview of the candidate DERs in the genome

plotOverview(

regions = fullRegions, annotation = results$annotation,

type = "fwer"

)

suppressPackageStartupMessages(library("TxDb.Hsapiens.UCSC.hg19.knownGene"))

txdb <- TxDb.Hsapiens.UCSC.hg19.knownGene

## Base-levle coverage plots for the first 10 regions

plotRegionCoverage(

regions = fullRegions, regionCoverage = regionCov,

groupInfo = pheno$group, nearestAnnotation = results$annotation,

annotatedRegions = annoRegs, whichRegions = 1:10, txdb = txdb, scalefac = 1,

ask = FALSE

)

## Cluster plot for the first region

plotCluster(

idx = 1, regions = fullRegions, annotation = results$annotation,

coverageInfo = fullCov$chr21, txdb = txdb, groupInfo = pheno$group,

titleUse = "fwer"

)The quick start to using derfinder has example plots for the expressed regions-level approach. The vignette for derfinderPlot has even more examples.

Interactive HTML reports

We have also developed an addon package called regionReport available via Bioconductor.

The function derfinderReport() in regionReport

basically takes advantage of the results from

mergeResults() and plotting functions available in derfinderPlot

as well as other neat features from knitrBootstrap.

It then generates a customized report for single-base level F-statistics

DER finding analyses.

For results from regionMatrix() or

railMatrix() use renderReport() from regionReport.

In both cases, the resulting HTML report promotes reproducibility of the

analysis and allows you to explore in more detail the results through

some diagnostic plots.

We think that these reports are very important when you are exploring

the resulting DERs after changing a key parameter in

analyzeChr(), regionMatrix() or

railMatrix().

Check out the vignette for regionReport for example reports generated with it.

Miscellaneous features

In this section we go over some other features of derfinder which can be useful for performing feature-counts based analyses, exploring the results, or exporting data.

Feature level analysis

Similar to the expressed region-level analysis, you might be

interested in performing a feature-level analysis. More specifically,

this means getting a count matrix at the exon-level (or gene-level).

coverageToExon() allows you to get such a matrix by taking

advantage of the summarized annotation produced by

makeGenomicState().

In this example, we use the genomic state included in the package which has the information for chr21 TxDb.Hsapiens.UCSC.hg19.knownGene annotation.

## Get the exon-level matrix

system.time(exonCov <- coverageToExon(fullCov, genomicState$fullGenome, L = 76))## class: SerialParam

## bpisup: FALSE; bpnworkers: 1; bptasks: 0; bpjobname: BPJOB

## bplog: FALSE; bpthreshold: INFO; bpstopOnError: TRUE

## bpRNGseed: ; bptimeout: NA; bpprogressbar: FALSE

## bpexportglobals: FALSE; bpexportvariables: FALSE; bpforceGC: FALSE

## bpfallback: FALSE

## bplogdir: NA

## bpresultdir: NA

## class: SerialParam

## bpisup: FALSE; bpnworkers: 1; bptasks: 0; bpjobname: BPJOB

## bplog: FALSE; bpthreshold: INFO; bpstopOnError: TRUE

## bpRNGseed: ; bptimeout: NA; bpprogressbar: FALSE

## bpexportglobals: FALSE; bpexportvariables: FALSE; bpforceGC: FALSE

## bpfallback: FALSE

## bplogdir: NA

## bpresultdir: NA## 2024-12-13 15:15:02.469954 coverageToExon: processing chromosome chr21## class: SerialParam

## bpisup: FALSE; bpnworkers: 1; bptasks: 0; bpjobname: BPJOB

## bplog: FALSE; bpthreshold: INFO; bpstopOnError: TRUE

## bpRNGseed: ; bptimeout: NA; bpprogressbar: FALSE

## bpexportglobals: FALSE; bpexportvariables: FALSE; bpforceGC: FALSE

## bpfallback: FALSE

## bplogdir: NA

## bpresultdir: NA## 2024-12-13 15:15:04.088645 coverageToExon: processing chromosome chr21## user system elapsed

## 4.823 0.100 4.923

## Dimensions of the matrix

dim(exonCov)## [1] 2658 12

## Explore a little bit

tail(exonCov)## HSB113 HSB123 HSB126 HSB130 HSB135 HSB136

## 4983 0.1173684 0.1382895 0.0000000 0.0000000 0.07894737 0.03947368

## 4985 4.6542105 1.4557895 1.6034210 0.7798684 1.29592103 0.72986842

## 4986 7.1510526 291.0205261 252.4892106 152.1905258 439.69500020 263.63486853

## 4988 0.0000000 0.7063158 0.7223684 0.2639474 0.48657895 0.37657895

## 4990 2.3064474 64.9423686 69.6584212 35.5769737 101.76394746 76.96736838

## 4992 0.1652632 8.1834211 9.7538158 2.4193421 10.08618420 14.41013157

## HSB145 HSB153 HSB159 HSB178 HSB92 HSB97

## 4983 5.263158e-03 0.1052632 0.04934211 0.1289474 0.03986842 0.44605264

## 4985 9.964474e-01 10.4906578 4.39013167 7.3119736 1.65184211 10.87723678

## 4986 2.345414e+02 4.5310526 10.64368415 4.9807895 11.40447366 6.23315790

## 4988 6.156579e-01 0.0000000 0.00000000 0.0000000 0.05644737 0.00000000

## 4990 5.978842e+01 1.2284210 2.67486840 0.9542105 3.98013159 1.96131579

## 4992 5.325658e+00 0.2402632 0.73039474 0.2601316 0.66907895 0.07473684With this matrix, rounded if necessary, you can proceed to use packages such as limma, DESeq2, edgeR among others.

Compare results visually

We can certainly make region-level plots using

plotRegionCoverage() or cluster plots using

plotCluster() or overview plots using

plotOveview(), all from derfinderPlot.

First we need to get the relevant annotation information.

## Annotate regions as exonic, intronic or intergenic

system.time(annoGenome <- annotateRegions(

regionMat$chr21$regions,

genomicState$fullGenome

))## 2024-12-13 15:15:05.268119 annotateRegions: counting## 2024-12-13 15:15:05.314728 annotateRegions: annotating## user system elapsed

## 0.115 0.004 0.119

## Note that the genomicState object included in derfinder only has information

## for chr21 (hg19).

## Identify closest genes to regions

suppressPackageStartupMessages(library("bumphunter"))

suppressPackageStartupMessages(library("TxDb.Hsapiens.UCSC.hg19.knownGene"))

txdb <- TxDb.Hsapiens.UCSC.hg19.knownGene

genes <- annotateTranscripts(txdb)## No annotationPackage supplied. Trying org.Hs.eg.db.## Loading required package: org.Hs.eg.db## Warning in library(package, lib.loc = lib.loc, character.only = TRUE,

## logical.return = TRUE, : there is no package called 'org.Hs.eg.db'## Could not load org.Hs.eg.db. Will continue without annotation## Getting TSS and TSE.## Getting CSS and CSE.## Getting exons.

system.time(annoNear <- matchGenes(regionMat$chr21$regions, genes))## user system elapsed

## 1.512 0.000 1.512Now we can proceed to use derfinderPlot to make the region-level plots for the top 100 regions.

## Identify the top regions by highest total coverage

top <- order(regionMat$chr21$regions$area, decreasing = TRUE)[1:100]

## Base-level plots for the top 100 regions with transcript information

library("derfinderPlot")

plotRegionCoverage(regionMat$chr21$regions,

regionCoverage = regionMat$chr21$bpCoverage,

groupInfo = pheno$group, nearestAnnotation = annoNear,

annotatedRegions = annoGenome, whichRegions = top, scalefac = 1,

txdb = txdb, ask = FALSE

)However, we can alternatively use epivizr to view the candidate DERs and the region matrix results in a genome browser.

## Load epivizr, it's available from Bioconductor

library("epivizr")

## Load data to your browser

mgr <- startEpiviz()

ders_dev <- mgr$addDevice(

fullRegions[as.logical(fullRegions$significantFWER)], "Candidate DERs"

)

ders_potential_dev <- mgr$addDevice(

fullRegions[!as.logical(fullRegions$significantFWER)], "Potential DERs"

)

regs_dev <- mgr$addDevice(regionMat$chr21$regions, "Region Matrix")

## Go to a place you like in the genome

mgr$navigate(

"chr21", start(regionMat$chr21$regions[top[1]]) - 100,

end(regionMat$chr21$regions[top[1]]) + 100

)

## Stop the navigation

mgr$stopServer()Export coverage to BigWig files

derfinder

also includes createBw() with related functions

createBwSample() and coerceGR() to export the

output of fullCoverage() to BigWig files. These functions

can be useful in the case where you start with BAM files and later on

want to save the coverage data into BigWig files, which are generally

smaller.

## Subset only the first sample

fullCovSmall <- lapply(fullCov, "[", 1)

## Export to BigWig

bw <- createBw(fullCovSmall)## 2024-12-13 15:15:11.252502 coerceGR: coercing sample HSB113## 2024-12-13 15:15:11.282411 createBwSample: exporting bw for sample HSB113

## See the file. Note that the sample name is used to name the file.

dir(pattern = ".bw")## [1] "HSB113.bw" "meanChr21.bw"

## Internally createBw() coerces each sample to a GRanges object before

## exporting to a BigWig file. If more than one sample was exported, the

## GRangesList would have more elements.

bw## GRangesList object of length 1:

## $HSB113

## GRanges object with 155950 ranges and 1 metadata column:

## seqnames ranges strand | score

## <Rle> <IRanges> <Rle> | <numeric>

## chr21 chr21 9458667-9458741 * | 0.04

## chr21 chr21 9540957-9540971 * | 0.04

## chr21 chr21 9543719-9543778 * | 0.04

## chr21 chr21 9651480-9651554 * | 0.04

## chr21 chr21 9653397-9653471 * | 0.04

## ... ... ... ... . ...

## chr21 chr21 48093246-48093255 * | 0.04

## chr21 chr21 48093257-48093331 * | 0.04

## chr21 chr21 48093350-48093424 * | 0.04

## chr21 chr21 48112194-48112268 * | 0.04

## chr21 chr21 48115056-48115130 * | 0.04

## -------

## seqinfo: 1 sequence from an unspecified genomeAdvanced arguments

If you are interested in using advanced arguments in derfinder, they are described in the manual pages of each function. Some of the most common advanced arguments are:

-

chrsStyle(default isUCSC) -

verbose(by defaultTRUE).

verbose controls whether to print status updates for

nearly all the functions. chrsStyle is used to determine

the chromosome naming style and is powered by GenomeInfoDb.

Note that chrsStyle is used in any of the functions that

call extendedMapSeqlevels(). If you are working with a

different organism than Homo sapiens set the global

species option using

options(species = 'your species') with the syntax used in

names(GenomeInfoDb::genomeStyles()). If you want to disable